Introducing Our User Guide

The world of mainstream computing is changing rapidly these days. If you open the case and look under the covers of your computer, you’ll most likely see a dual-core processor there, or a quad-core one, if you have a high-end computer. We all now run our software on multi-processor systems.

The code we write today and tomorrow will probably never run on a single-processor system: parallel hardware has become standard. Not so with the software though, at least not yet. People still create single-threaded code, even though it will not be able to leverage the full power of current and future hardware.

| The code we write today will probably never run on a single-processor system! |

Some developers experiment with low-level concurrency primitives, such as threads, locks and synchronized blocks. However, it has become obvious that shared-memory multi-threading at the application level causes more trouble than it solves. Low-level concurrency handling is usually hard to get right, and it’s not much fun either.

With such a radical change in hardware, software inevitably has to change dramatically too. Higher-level concurrency and parallelism concepts like map/reduce, fork/join, actors and dataflow provide natural abstractions for different types of problem domains while leveraging the multi-core hardware.

Enter GPars

Meet GPars, an open-source concurrency and parallelism library for Java and Groovy that gives you a number of high-level abstractions for writing concurrent and parallel code in Groovy (map/reduce, fork/join, asynchronous closures, actors, agents, dataflow concurrency and other concepts), which can make your Java and Groovy code concurrent and/or parallel with little effort.

With GPars, your Java and/or Groovy code can easily utilize all the available processors on the target system. You can run multiple calculations at the same time, request network resources in parallel, safely solve hierarchical divide-and-conquer problems, perform functional style map/reduce or data parallel collection processing or build your applications around the actor or dataflow model.

If you’re working on a commercial, open-source, educational or any other type of software project in Groovy, download the binaries or integrate them from the Maven repository and get going. The door to writing highly concurrent and/or parallel Java and Groovy code is wide open. Enjoy!

Credits

This project could not have reached the point where it currently stands without the great help and contributions of many individuals, who have devoted their time, energy and expertise to make GPars a solid product. First, it’s the people in the core team who should be mentioned:

- Václav Pech
- Dierk Koenig
- Alex Tkachman
- Russel Winder
- Paul King
- Jon Kerridge
- Rafał Sławik

Over time, many other people have contributed their ideas, provided useful feedback or helped GPars in one way or another. There are many people in this group, too many to name them all, but let’s list at least the most active:

- Hamlet d’Arcy
- Hans Dockter
- Guillaume Laforge
- Robert Fischer
- Johannes Link
- Graeme Rocher
- Alex Miller
- Jeff Gortatowsky
- Jiří Kropáček
- Jim Northrop

| Many thanks to everyone! |

User Guide To Getting Started

A Few Assumptions

Let’s set out a few assumptions before we start:

- You know and use Groovy and/or Java; otherwise you’d not be investing your valuable time studying a concurrency and parallelism library for Groovy and/or Java.
- You definitely want to write code employing concurrency and parallelism concepts.
- If you are not using Groovy, you are prepared to pay the inevitable verbosity tax of using Java.
- You target multi-core hardware with your code.
- You appreciate that in concurrent and parallel code things can happen at any time, in any order, and, more likely, with more than one thing happening at once.

Ready?

With those assumptions in place, we can get started.

It’s becoming more and more obvious that dealing with concurrency and parallelism at the thread/synchronized/lock level, as provided by the JVM, is far too low a level to be safe and comfortable.

Many high-level concepts, such as actors and dataflow, have been around for quite some time. Parallel computers were in use, at least in data centres if not on the desktop, long before multi-core chips hit the hardware mainstream.

So now is the time to adopt these higher-level abstractions into the mainstream software industry.

This is what GPars enables for the Groovy and Java languages, allowing them to use higher-level abstractions and, therefore, make development of concurrent and parallel software easier and less error prone.

The concepts available in GPars can be categorized into three groups:

- Code-level helpers - Constructs that can be applied to small parts of your code-base, such as an individual algorithm or data structure, without any major changes in the overall project architecture
  - Parallel Collections
  - Asynchronous Processing
  - Fork/Join (Divide/Conquer)
- Architecture-level concepts - Constructs that need to be taken into account when designing the project structure
  - Actors
  - Communicating Sequential Processes (CSP)
  - Dataflow
  - Data Parallelism
- Shared Mutable State Protection - More than 95% of the current use of shared mutable state can be avoided using proper abstractions. Good abstractions are still necessary for the remaining 5% of use cases, i.e. when shared mutable state cannot be avoided.
  - Agents
  - Software Transactional Memory (not fully implemented in GPars as yet)

Download and Install

GPars is now distributed as part of Groovy. So if you have a Groovy installation, you should already have GPars. Your exact version of GPars will, of course, depend on which version of Groovy you use.

If you don’t already have GPars, and you do have Groovy, then perhaps you should upgrade your Groovy!

| If you need it, you can download Groovy from here, and GPars from here. |

If you don’t have a Groovy installation, but use Groovy through build dependencies or perhaps just the groovy-all artifact, then you will need to get GPars. Also, if you want to use a different version of GPars from the one bundled with Groovy, or have an old GPars-free Groovy that you cannot upgrade, you will need to get GPars. The ways to download GPars are:

- Download the artifact from a repository and add it and all the transitive dependencies manually.
- Specify a dependency in Gradle, Maven, or Ivy (or Gant, or Ant) build files.
- Use Grapes (especially useful for Groovy scripts).
- Download and install it from here.

If you’re building a Grails or a Griffon application, you can use the appropriate plugins to fetch our jar files for you.

The GPars Artifact

As noted above, GPars is now distributed as standard with Groovy. If, however, you have to manage this dependency manually, the GPars artifact is in the main Maven repository and was in the Codehaus main and snapshots repositories before they closed.

Release versions can be found in the main Maven repository. The current development version (SNAPSHOT) was hosted in the Codehaus snapshots repository; we’re moving it to another location.

To use GPars from Gradle or Grapes, use the specification:

"org.codehaus.gpars:gpars:1.2.0"

You may need to add our snapshot repository manually to the search list in this latter case. Using Maven the dependency is:

<dependency>
    <groupId>org.codehaus.gpars</groupId>
    <artifactId>gpars</artifactId>
    <version>1.2.0</version>
</dependency>

Transitive Dependencies

GPars as a library depends on Groovy versions later than 2.2.1. Also, the Fork/Join concurrency library must be available. This comes as standard with Java 7.

GPars 2.0 will depend on Java 8 and will only be usable with Groovy 3.0 and later.

Please visit the Integration page on our GPars website for more details.

A Hello World Example

Once you’re set up, try the following Groovy script to confirm your setup is functioning properly.

import static groovyx.gpars.actor.Actors.actor

/**
 * A demo showing two cooperating actors. The decryptor decrypts received messages
 * and sends them back. The console actor sends a message to decrypt, prints out
 * the reply and terminates both actors. The main thread waits on both actors to
 * finish using the join() method to prevent premature exit, since both actors use
 * the default actor group, which uses a daemon thread pool.
 * @author Dierk Koenig, Vaclav Pech
 */
def decryptor = actor {
    loop {
        react { message ->
            if (message instanceof String) reply message.reverse()
            else stop()
        }
    }
}

def console = actor {
    decryptor.send 'lellarap si yvoorG'
    react {
        println 'Decrypted message: ' + it
        decryptor.send false
    }
}

[decryptor, console]*.join()

You should receive a message "Decrypted message: Groovy is parallel" on the console.

To quick-test GPars with the Java API, compile and run the following Java code:

import groovyx.gpars.MessagingRunnable;
import groovyx.gpars.actor.DynamicDispatchActor;

public class StatelessActorDemo {
    public static void main(String[] args) throws InterruptedException {
        final MyStatelessActor actor = new MyStatelessActor();
        actor.start();
        actor.send("Hello");
        actor.sendAndWait(10);
        actor.sendAndContinue(10.0, new MessagingRunnable<String>() {
            @Override protected void doRun(final String s) {
                System.out.println("Received a reply " + s);
            }
        });
    }
}

class MyStatelessActor extends DynamicDispatchActor {
    public void onMessage(final String msg) {
        System.out.println("Received " + msg);
        replyIfExists("Thank you");
    }

    public void onMessage(final Integer msg) {
        System.out.println("Received a number " + msg);
        replyIfExists("Thank you");
    }

    public void onMessage(final Object msg) {
        System.out.println("Received an object " + msg);
        replyIfExists("Thank you");
    }
}

Remember though that you will almost certainly have to add the Groovy artifact to the build as well as the GPars artifact. GPars may well work at Java speeds with Java applications, but it still has some compilation dependencies on Groovy.

Code Conventions

We follow certain conventions in our code samples. Understanding these conventions may help you read and comprehend GPars code samples better.

- The leftShift operator '<<' has been overloaded on actors, agents and dataflow expressions (both variables and streams) to mean send a message or assign a value.

  myActor << 'message'
  myAgent << {account -> account.add('5 USD')}
  myDataflowVariable << 120332

- On actors and agents, the default call() method has also been overloaded to mean send. So sending a message to an actor or agent may look like a regular method call.

  myActor "message"
  myAgent {house -> house.repair()}

- The rightShift operator '>>' in GPars has the whenBound meaning. So

  myDataflowVariable >> {value -> doSomethingWith(value)}

  will schedule the closure to run only after myDataflowVariable is bound to a value, with the value as a parameter.

Usage

In samples, we tend to statically import frequently used factory methods:

- GParsPool.withPool()
- GParsPool.withExistingPool()
- GParsExecutorsPool.withPool()
- GParsExecutorsPool.withExistingPool()
- Actors.actor()
- Actors.reactor()
- Actors.fairReactor()
- Actors.messageHandler()
- Actors.fairMessageHandler()
- Agent.agent()
- Agent.fairAgent()
- Dataflow.task()
- Dataflow.operator()

It’s more a matter of style preferences and personal taste, but we think static imports make the code more compact and readable.

Getting Set Up In An IDE

Adding the GPars jar files to your project or defining the appropriate dependencies in pom.xml should be enough to get you started with GPars in your IDE.

GPars DSL recognition

IntelliJ IDEA, in both the free Community Edition and the commercial Ultimate Edition, will recognize the GPars domain-specific languages, complete methods like eachParallel(), reduce() or callAsync(), and validate them. GPars uses the Groovy DSL mechanism, which teaches IntelliJ IDEA the DSLs as soon as the GPars jar file is added to the project.

Applicability of Concepts

GPars provides a lot of concepts to pick from. We’re continuously building and updating our documents to help users choose the right level of abstraction for the task at hand. Please refer to Concepts Compared for details.

To briefly summarize the suggestions, here are some basic guide-lines:

-

You’re looking at a collection, which needs to be iterated or processed using one of the many beautiful Groovy collection methods, like each() , collect() , find() etc.. Suppose that processing each element of the collection is independent of the other items, then using GPars parallel collections can be appropriate.

-

If you have a long-lasting calculation , which may safely run in the background, use the asynchronous invocation support in GPars. Since GPars asynchronous functions can be composed, you can quickly parallelize tyhese complex functional calculations without having to mark independent calculations explicitly.

-

Say you need to parallelize an algorithm. You can identify a set of tasks with their mutual dependencies. The tasks typically do not need to share data, but instead some tasks may need to wait for other tasks to finish before starting. Now you’re ready to express these dependencies explicitly in code. With GPars dataflow tasks, you create internally sequential tasks, each of which can run concurrently with the others. Dataflow variables and channels provide the tasks with the capability to declare their dependencies and to exchange data safely.

-

Perhaps you can’t avoid using shared mutable state in your logic. Multiple threads will be accessing shared data and (some of them) modifying it. A traditional locking and synchronized approach feels too risky or unfamiliar? Then go for agents to wrap your data and serialize all access to it.

-

You’re building a system with high concurrency demands. Tweaking a data structure here or task there won’t cut it. You need to build the architecture from the ground up with concurrency in mind. Message-passing might be the way to go. Your choices could include :

-

Groovy CSP to give you highly deterministic and composable models for concurrent processes. A model is organized around the concept of calculations or processes, which run concurrently and communicate through synchronous channels.

-

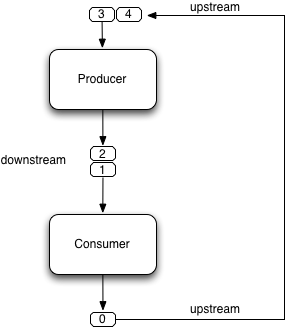

If you’re trying to solve a complex data-processing problem, consider GPars dataflow operators to build a data flow network. The concept is organized around event-driven transformations wired into pipelines using asynchronous channels.

-

Actors and Active Objects will shine if you need to build a general-purpose, highly concurrent and scalable architecture following the object-oriented paradigm.

-
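The agent guideline in the list above (wrap the data and serialize all access to it) can be illustrated without any GPars classes. The following is a minimal sketch of the idea only, assuming a single-threaded JDK executor as the serializing mechanism; the class name SerializedStateSketch and its methods are hypothetical and are not the GPars Agent API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.UnaryOperator;

/** Hypothetical stand-in for the agent idea; this is NOT the GPars Agent class. */
final class SerializedStateSketch<T> {
    // A single worker thread guarantees that updates never run concurrently.
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private T state; // read and written only by the worker thread after construction

    SerializedStateSketch(final T initial) {
        this.state = initial;
    }

    /** Queues an update function; the caller does not wait for it to run. */
    void send(final UnaryOperator<T> update) {
        worker.execute(() -> state = update.apply(state));
    }

    /** Waits until all previously queued updates have run, then returns the value. */
    T getVal() {
        try {
            return worker.submit(() -> state).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    void shutdown() {
        worker.shutdown();
    }

    /** Several threads increment one shared counter without any explicit locks. */
    static int countConcurrently(final int threads, final int incrementsPerThread) {
        final SerializedStateSketch<Integer> counter = new SerializedStateSketch<>(0);
        final List<Thread> senders = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            final Thread sender = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.send(value -> value + 1);
                }
            });
            senders.add(sender);
            sender.start();
        }
        try {
            for (final Thread sender : senders) {
                sender.join();
            }
            return counter.getVal();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        } finally {
            counter.shutdown();
        }
    }

    public static void main(final String[] args) {
        System.out.println(countConcurrently(4, 1000)); // prints 4000
    }
}
```

Because every update is funnelled through one thread, no increment is lost even though four threads send updates at the same time. A real agent offers more than this sketch does.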

Now you may have a better idea of what concepts to use on your current project. Go check out more details on them in our User Guide.

What’s New

The next GPars 1.3.0 release introduces several enhancements and improvements on top of the previous release, mainly in the dataflow area.

Check out the JIRA release notes.

Project Changes

Asynchronous Functions

TBD

Parallel Collections

TBD

Fork / Join

TBD

Actors

- Remote actors
- Exception propagation from active objects

Dataflow

- Remote dataflow variables and channels
- Dataflow operators accepting a variable number of arguments
- Select made @CompileStatic compatible

Agent

- Remote agents

STM

TBD

Other

- Raised the JDK dependency to version 1.7
- Raised the Groovy dependency to version 2.2
- Replaced the jsr-166y fork-join pool implementation with the one from JDK 1.7
- Removed the dependency on jsr-166y

Java API – Using GPars from Java

Using GPars is very addictive, I guarantee. Once you get hooked, you won’t be able to code without it. If the world forces you to write code in Java, you’ll still be able to benefit from many of the GPars features.

Java API specifics

Some parts of GPars are irrelevant in Java and it’s better to use the underlying Java libraries directly:

- Parallel Collection – use jsr-166y library’s Parallel Array directly until GPars 1.3.0 becomes available
- Fork/Join – use jsr-166y library’s Fork/Join support directly until GPars 1.3.0 becomes available
- Asynchronous functions – use Java executor services directly

The other parts of GPars can be used from Java just as from Groovy, although most will miss the Groovy DSL capabilities.

GPars Closures in Java API

To overcome the lack of closures as a language element in Java and to avoid forcing users to use Groovy closures directly through the Java API, a few handy wrapper classes have been provided to help you define callbacks, actor bodies or dataflow tasks.

- groovyx.gpars.MessagingRunnable - used for single-argument callbacks or actor bodies
- groovyx.gpars.ReactorMessagingRunnable - used for the ReactiveActor body
- groovyx.gpars.DataflowMessagingRunnable - used for the bodies of dataflow operators

These classes can be used in places where the GPars API expects a Groovy closure.

Actors

The DynamicDispatchActor as well as the ReactiveActor classes can be used just like in Groovy:

import groovyx.gpars.MessagingRunnable;
import groovyx.gpars.actor.DynamicDispatchActor;

public class StatelessActorDemo {
    public static void main(String[] args) throws InterruptedException {
        final MyStatelessActor actor = new MyStatelessActor();
        actor.start();
        actor.send("Hello");
        actor.sendAndWait(10);
        actor.sendAndContinue(10.0, new MessagingRunnable<String>() {
            @Override protected void doRun(final String s) {
                System.out.println("Received a reply " + s);
            }
        });
    }
}

class MyStatelessActor extends DynamicDispatchActor {
    public void onMessage(final String msg) {
        System.out.println("Received " + msg);
        replyIfExists("Thank you");
    }

    public void onMessage(final Integer msg) {
        System.out.println("Received a number " + msg);
        replyIfExists("Thank you");
    }

    public void onMessage(final Object msg) {
        System.out.println("Received an object " + msg);
        replyIfExists("Thank you");
    }
}

There are few differences between Groovy and Java for GPars use, but notice the callbacks instantiating the MessagingRunnable class in place of a Groovy closure.

import groovy.lang.Closure;
import groovyx.gpars.ReactorMessagingRunnable;
import groovyx.gpars.actor.Actor;
import groovyx.gpars.actor.ReactiveActor;

public class ReactorDemo {
    public static void main(final String[] args) throws InterruptedException {
        final Closure handler = new ReactorMessagingRunnable<Integer, Integer>() {
            @Override protected Integer doRun(final Integer integer) {
                return integer * 2;
            }
        };
        final Actor actor = new ReactiveActor(handler);
        actor.start();

        System.out.println("Result: " + actor.sendAndWait(1));
        System.out.println("Result: " + actor.sendAndWait(2));
        System.out.println("Result: " + actor.sendAndWait(3));
    }
}

Convenience Factory Methods

Obviously, all the essential factory methods to build actors quickly are available where you’d expect them.

import groovy.lang.Closure;
import groovyx.gpars.ReactorMessagingRunnable;
import groovyx.gpars.actor.Actor;
import groovyx.gpars.actor.Actors;

public class ReactorDemo {
    public static void main(final String[] args) throws InterruptedException {
        final Closure handler = new ReactorMessagingRunnable<Integer, Integer>() {
            @Override protected Integer doRun(final Integer integer) {
                return integer * 2;
            }
        };
        final Actor actor = Actors.reactor(handler);

        System.out.println("Result: " + actor.sendAndWait(1));
        System.out.println("Result: " + actor.sendAndWait(2));
        System.out.println("Result: " + actor.sendAndWait(3));
    }
}

Agents

import groovyx.gpars.MessagingRunnable;
import groovyx.gpars.agent.Agent;

public class AgentDemo {
    public static void main(final String[] args) throws InterruptedException {
        final Agent counter = new Agent<Integer>(0);
        counter.send(10);
        System.out.println("Current value: " + counter.getVal());

        counter.send(new MessagingRunnable<Integer>() {
            @Override protected void doRun(final Integer integer) {
                counter.updateValue(integer + 1);
            }
        });
        System.out.println("Current value: " + counter.getVal());
    }
}

Dataflow Concurrency

Both DataflowVariables and DataflowQueues can be used from Java without any hiccups. Just avoid the handy overloaded operators and go straight to the methods, such as bind, whenBound, getVal and others.

You may also continue to use dataflow tasks, passing them instances of Runnable or Callable just like Groovy closures.

import groovyx.gpars.MessagingRunnable;
import groovyx.gpars.dataflow.DataflowVariable;
import groovyx.gpars.dataflow.Promise;
import groovyx.gpars.group.DefaultPGroup;

import java.util.concurrent.Callable;

public class DataflowTaskDemo {
    public static void main(final String[] args) throws InterruptedException {
        final DefaultPGroup group = new DefaultPGroup(10);

        final DataflowVariable a = new DataflowVariable();

        // The first task binds the variable.
        group.task(new Runnable() {
            public void run() {
                a.bind(10);
            }
        });

        // The second task waits for the variable to be bound before it computes.
        final Promise result = group.task(new Callable() {
            public Object call() throws Exception {
                return (Integer)a.getVal() + 10;
            }
        });

        result.whenBound(new MessagingRunnable<Integer>() {
            @Override protected void doRun(final Integer integer) {
                System.out.println("arguments = " + integer);
            }
        });

        System.out.println("result = " + result.getVal());
    }
}

Dataflow Operators

The sample below should illustrate the main differences between Groovy and Java APIs for dataflow operators.

- Use the convenience factory methods when accepting lists of channels to create operators or selectors
- Use DataflowMessagingRunnable to specify the operator body
- Call getOwningProcessor() to get hold of the operator from within the body in order to e.g. bind output values

import groovyx.gpars.DataflowMessagingRunnable;
import groovyx.gpars.dataflow.Dataflow;
import groovyx.gpars.dataflow.DataflowQueue;
import groovyx.gpars.dataflow.operator.DataflowProcessor;

import java.util.Arrays;
import java.util.List;

public class DataflowOperatorDemo {
    public static void main(final String[] args) throws InterruptedException {
        final DataflowQueue stream1 = new DataflowQueue();
        final DataflowQueue stream2 = new DataflowQueue();
        final DataflowQueue stream3 = new DataflowQueue();
        final DataflowQueue stream4 = new DataflowQueue();

        final DataflowProcessor op1 = Dataflow.selector(Arrays.asList(stream1), Arrays.asList(stream2), new DataflowMessagingRunnable(1) {
            @Override protected void doRun(final Object... objects) {
                getOwningProcessor().bindOutput(2*(Integer)objects[0]);
            }
        });

        final List secondOperatorInput = Arrays.asList(stream2, stream3);

        final DataflowProcessor op2 = Dataflow.operator(secondOperatorInput, Arrays.asList(stream4), new DataflowMessagingRunnable(2) {
            @Override protected void doRun(final Object... objects) {
                getOwningProcessor().bindOutput((Integer) objects[0] + (Integer) objects[1]);
            }
        });

        stream1.bind(1);
        stream1.bind(2);
        stream1.bind(3);
        stream3.bind(100);
        stream3.bind(100);
        stream3.bind(100);

        System.out.println("Result: " + stream4.getVal());
        System.out.println("Result: " + stream4.getVal());
        System.out.println("Result: " + stream4.getVal());

        op1.stop();
        op2.stop();
    }
}

Performance

In general, GPars overhead is identical irrespective of whether you use it from Groovy or Java and it tends to be very low anyway. GPars actors, for example, can compete head-to-head with other JVM actor options, like Scala actors.

Since Groovy code, in general, runs a little slower than Java code, due to dynamic method invocations, you might consider writing your code in Java to improve performance.

Typically numeric operations or frequent fine-grained method calls within a task or actor body may benefit from a rewrite into Java.

Prerequisites

All the GPars integration rules apply equally to Java projects and Groovy projects. You only need to include the Groovy distribution jar file in your project and you are good to go.

You may also want to check out our sample Java-Maven project for tips on how to integrate GPars into a Maven-based pure Java application – Java Sample Maven Project.

User Guide To Data Parallelism

Focusing on data instead of processes helps us create robust concurrent programs. As a programmer, you define your data together with functions that should be applied to it and then let the underlying machinery process the data. Typically, a set of concurrent tasks will be created and submitted to a thread pool for processing.

In GPars, the GParsPool and GParsExecutorsPool classes give you access to low-level data parallelism techniques. The GParsPool class relies on the Fork/Join implementation introduced in JDK 7 and offers excellent functionality and performance. The GParsExecutorsPool is provided for those who still need to use the older Java executors.

There are three fundamental domains covered by the GPars low-level data parallelism:

- Processing collections concurrently
- Running functions (closures) asynchronously
- Performing Fork/Join (Divide/Conquer) algorithms

| The API described here is based on using GPars with JDK7. It can be used with later JDKs, but JDK8 introduced the Streams framework which can be used directly from Groovy and, in essence, replaces the GPars features covered here. Work is underway to provide the API described here based on the JDK8 Streams framework for use with JDK8 and later to provide a simple upgrade path. |

Parallel Collections

Dealing with data frequently involves manipulating collections. Lists, arrays, sets, maps, iterators, strings and a lot of other data types can be viewed as collections of items. The common pattern to process such collections is to take elements sequentially, one-by-one, and perform an action for each of the items in the series.

Take, for example, the min function, which is supposed to return the smallest element of a collection. When you call the min method on a collection of numbers, a variable (minVal, say) is created to store the smallest value seen so far, initialized to some reasonable value for the given type, for example the first element of the collection or the largest value the type can represent. The elements of the collection are then iterated through and each is compared to the stored value. Should a value be less than the one currently held in minVal, then minVal is changed to store the newly seen smaller value.

Once all elements have been processed, the minimum value in the collection is stored in the minVal.

However simple, this solution is totally wrong on multi-core and multi-processor hardware. Running the min function on a dual-core chip can leverage at most 50% of the computing power of the chip. On a quad-core, it would be only 25%. So in this latter case, this algorithm effectively wastes 75% of the computing power of the chip.

Tree-like structures prove to be more appropriate for parallel processing.

The min function in our example doesn’t need to iterate through all the elements in a row and compare their values with the minVal variable. What it can do, instead, is rely on the multi-core/multi-processor nature of our hardware.

A parallel_min function can, for example, compare pairs (or tuples of certain size) of neighboring values in the collection and promote the smallest value from the tuple into a next round of comparisons.

Searching for the minimum in different tuples can safely happen in parallel, so tuples in the same round can be processed by different cores at the same time without races or contention among threads.
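The tree-like search described above can be sketched with the Fork/Join framework that ships with the JDK. This is an illustrative sketch only, not the GPars implementation: the names ParallelMinSketch, MinTask and THRESHOLD are hypothetical, and the sketch splits ranges in halves rather than comparing fixed-size tuples.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

/** Hypothetical sketch: tree-style minimum search on the JDK Fork/Join framework. */
final class ParallelMinSketch {

    /** Halves the range until it is small, then merges the partial minima. */
    static final class MinTask extends RecursiveTask<Integer> {
        private static final int THRESHOLD = 4; // arbitrary cut-off chosen for the sketch
        private final int[] data;
        private final int from; // inclusive
        private final int to;   // exclusive

        MinTask(final int[] data, final int from, final int to) {
            this.data = data;
            this.from = from;
            this.to = to;
        }

        @Override
        protected Integer compute() {
            if (to - from <= THRESHOLD) {
                // Small range: plain sequential scan, seeded with the first element.
                int min = data[from];
                for (int i = from + 1; i < to; i++) {
                    min = Math.min(min, data[i]);
                }
                return min;
            }
            final int mid = (from + to) >>> 1;
            final MinTask left = new MinTask(data, from, mid);
            final MinTask right = new MinTask(data, mid, to);
            left.fork();                           // left half may run on another core
            final int rightMin = right.compute();  // right half runs in this thread
            return Math.min(left.join(), rightMin);
        }
    }

    static int parallelMin(final int[] data) {
        if (data.length == 0) {
            throw new IllegalArgumentException("empty array has no minimum");
        }
        final ForkJoinPool pool = new ForkJoinPool();
        try {
            return pool.invoke(new MinTask(data, 0, data.length));
        } finally {
            pool.shutdown();
        }
    }

    public static void main(final String[] args) {
        System.out.println(parallelMin(new int[]{7, 3, 9, -2, 8, 5, 11, 0, 4})); // prints -2
    }
}
```

Each sub-range is searched independently and only the partial results are combined, so the tasks share no mutable state and need no locking.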

Meet Parallel Arrays

Although not part of JDK7, the extra166y library brings a very convenient abstraction called Parallel Arrays, and GPars has harnessed this mechanism to provide a very Groovy API.

As noted earlier, work is underway to rewrite the GPars API in terms of Streams for users of JDK8 onwards. Of course people using JDK8 onwards can simply use Streams directly from Groovy.
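For readers on JDK8 or later, a minimal sketch of the Streams route might look as follows. It uses only the JDK; the class StreamsSketch and its method names are hypothetical, and the sample data is chosen purely for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical sketch using only JDK 8 Streams; no GPars classes are involved. */
final class StreamsSketch {

    /** Upper-cases every name in parallel and keeps those longer than four characters. */
    static List<String> selectImportantNames(final List<String> names) {
        return names.parallelStream()
                .map(String::toUpperCase)
                .filter(name -> name.length() > 4)
                .collect(Collectors.toList()); // encounter order of the list is preserved
    }

    /** Finds the smallest number; the Streams runtime splits and merges the work. */
    static int smallest(final List<Integer> numbers) {
        return numbers.parallelStream()
                .mapToInt(Integer::intValue)
                .min()
                .orElseThrow(() -> new IllegalArgumentException("empty collection"));
    }

    public static void main(final String[] args) {
        System.out.println(selectImportantNames(Arrays.asList("Joe", "Alice", "Dave", "Jason"))); // [ALICE, JASON]
        System.out.println(smallest(Arrays.asList(7, 3, 9, -2, 8))); // -2
    }
}
```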

How?

GPars leverages the Parallel Arrays implementation in several ways. The GParsPool and GParsExecutorsPool classes provide parallel variants of the common Groovy iteration methods like each, collect, findAll, etc.

def selfPortraits = images.findAllParallel{it.contains me}.collectParallel{it.resize()}

It also allows for a more functional map/reduce style of collection processing.

def smallestSelfPortrait = images.parallel.filter{it.contains me}.map{it.resize()}.min{it.sizeInMB}

GParsPool

Use of GParsPool — the JSR-166y-based concurrent collection processor

Usage

The GParsPool class provides a concurrency DSL for collections and objects, based on the ParallelArray from JSR-166y.

Examples of use:

import groovyx.gpars.GParsPool
import java.util.concurrent.atomic.AtomicInteger

// Sum the numbers concurrently.
GParsPool.withPool {
    final AtomicInteger result = new AtomicInteger(0)
    [1, 2, 3, 4, 5].eachParallel{result.addAndGet(it)}
    assert 15 == result.get()
}

// Multiply numbers asynchronously.
GParsPool.withPool {
    final List result = [1, 2, 3, 4, 5].collectParallel{it * 2}
    assert ([2, 4, 6, 8, 10].equals(result))
}

The passed-in closure takes an instance of a ForkJoinPool as a parameter, which can then be freely used inside the closure.

// Check whether all elements within a collection meet certain criteria.
GParsPool.withPool(5){ForkJoinPool pool ->
    assert [1, 2, 3, 4, 5].everyParallel{it > 0}
    assert ![1, 2, 3, 4, 5].everyParallel{it > 1}
}

The GParsPool.withPool method takes optional parameters for number of threads in the created pool plus an unhandled exceptions handler.

withPool(10){...}
withPool(20, exceptionHandler){...}

Pool Reuse

The GParsPool.withExistingPool takes an already existing ForkJoinPool instance to reuse. The DSL is valid only within the associated block of code and only for the thread that has called the withPool or withExistingPool methods. The withPool method returns only after all the worker threads have finished their tasks and the pool has been destroyed, returning the resulting value of the associated block of code. The withExistingPool method doesn’t wait for the pool threads to finish.

Alternatively, the methods of the GParsPool class can be statically imported, for example with import static groovyx.gpars.GParsPool.withPool, so we can omit the GParsPool class name.

withPool {
    assert [1, 2, 3, 4, 5].everyParallel{it > 0}
    assert ![1, 2, 3, 4, 5].everyParallel{it > 1}
}

The following methods are currently supported on all objects in Groovy:

- eachParallel
- eachWithIndexParallel
- collectParallel
- collectManyParallel
- findAllParallel
- findAnyParallel
- findParallel
- everyParallel
- anyParallel
- grepParallel
- groupByParallel
- foldParallel
- minParallel
- maxParallel
- sumParallel
- splitParallel
- countParallel

Meta-class Enhancer

As an alternative, you can use the ParallelEnhancer class to enhance meta-classes of any classes or individual instances with the parallel methods.

import groovyx.gpars.ParallelEnhancer

def list = [1, 2, 3, 4, 5, 6, 7, 8, 9]

ParallelEnhancer.enhanceInstance(list)

println list.collectParallel {it * 2 }

def animals = ['dog', 'ant', 'cat', 'whale']

ParallelEnhancer.enhanceInstance animals

println (animals.anyParallel {it ==~ /ant/} ? 'Found an ant' : 'No ants found')

println (animals.everyParallel {it.contains('a')} ? 'All animals contain a' : 'Some animals can live without an a')

When using the ParallelEnhancer class, you're not restricted to a withPool block when using the GParsPool DSLs. The enhanced classes or instances remain enhanced until they are garbage collected.

Exception Handling

If an exception is thrown while processing any of the passed-in closures, the first exception is re-thrown from the xxxParallel methods and the algorithm stops as soon as possible.

Transparently Parallel Collections

On top of adding new xxxParallel methods, GPars can also let you change the semantics of original iteration methods.

For example, you may be passing a collection into a library method that processes your collection sequentially, say, by using the collect method. By changing the semantics of the collect method on your collection, you can effectively parallelize the library's sequential code.

GParsPool.withPool {

//The selectImportantNames() will process the name collections concurrently

assert ['ALICE', 'JASON'] == selectImportantNames(['Joe', 'Alice', 'Dave', 'Jason'].makeConcurrent())

}

/**

* A function implemented using standard sequential collect() and findAll() methods.

*/

def selectImportantNames(names) {

names.collect {it.toUpperCase()}.findAll{it.size() > 4}

}

The makeSequential method will reset the collection back to the original sequential semantics.

import static groovyx.gpars.GParsPool.withPool

def list = [1, 2, 3, 4, 5, 6, 7, 8, 9]

println 'Sequential: '

list.each { print it + ',' }

println()

withPool {

println 'Sequential: '

list.each { print it + ',' }

println()

list.makeConcurrent()

println 'Concurrent: '

list.each { print it + ',' }

println()

list.makeSequential()

println 'Sequential: '

list.each { print it + ',' }

println()

}

println 'Sequential: '

list.each { print it + ',' }

println()

The asConcurrent() convenience method allows us to specify code blocks, where the collection maintains concurrent semantics.

import static groovyx.gpars.GParsPool.withPool

def list = [1, 2, 3, 4, 5, 6, 7, 8, 9]

println 'Sequential: '

list.each { print it + ',' }

println()

withPool {

println 'Sequential: '

list.each { print it + ',' }

println()

list.asConcurrent {

println 'Concurrent: '

list.each { print it + ',' }

println()

}

println 'Sequential: '

list.each { print it + ',' }

println()

}

println 'Sequential: '

list.each { print it + ',' }

println()

Code Samples

Transparent parallelism, including the makeConcurrent(), makeSequential() and asConcurrent() methods, is also available in combination with our ParallelEnhancer.

/**

* A function implemented using standard sequential collect() and findAll() methods.

*/

def selectImportantNames(names) {

names.collect {it.toUpperCase()}.findAll{it.size() > 4}

}

def names = ['Joe', 'Alice', 'Dave', 'Jason']

ParallelEnhancer.enhanceInstance(names)

//The selectImportantNames() will process the name collections concurrently

assert ['ALICE', 'JASON'] == selectImportantNames(names.makeConcurrent())

import groovyx.gpars.ParallelEnhancer

def list = [1, 2, 3, 4, 5, 6, 7, 8, 9]

println 'Sequential: '

list.each { print it + ',' }

println()

ParallelEnhancer.enhanceInstance(list)

println 'Sequential: '

list.each { print it + ',' }

println()

list.asConcurrent {

println 'Concurrent: '

list.each { print it + ',' }

println()

}

list.makeSequential()

println 'Sequential: '

list.each { print it + ',' }

println()

Avoid Side-Effects in Functions

We have to warn you. Since the closures that are provided to the parallel methods like eachParallel() or collectParallel() may be run in parallel, you have to make sure that each of the closures is written in a thread-safe manner. The closures must not hold internal state, share data, or have side effects beyond the boundaries of the single element that they've been invoked on. Violations of these rules open the door to race conditions and deadlocks, the most severe enemies of a modern multi-core programmer.

| Don’t do this ! |

def thumbnails = []

images.eachParallel {thumbnails << it.thumbnail} //Concurrently accessing a not-thread-safe collection of thumbnails? Don't do this!

At least, you’ve been warned.
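One way to stay on the safe side is to have each closure return its result and let the parallel operation assemble the output, instead of appending to a shared collection. The following is a minimal plain-JDK Java sketch of that idea using parallel streams. It does not use the GPars API, and the class name, the makeThumbnail() helper and the file names are hypothetical stand-ins.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class SideEffectFreeSketch {
    // Hypothetical stand-in for real thumbnail creation.
    static String makeThumbnail(String image) {
        return image + "-thumb";
    }

    // Each element is mapped independently; no shared mutable state is touched.
    // The collector assembles the result list in a thread-safe way.
    static List<String> thumbnails(List<String> images) {
        return images.parallelStream()
                .map(SideEffectFreeSketch::makeThumbnail)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(thumbnails(Arrays.asList("a.png", "b.png", "c.png")));
    }
}
```

In GPars terms this corresponds to preferring a collecting method such as collectParallel over eachParallel with a shared list.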

GParsExecutorsPool

Use of GParsExecutorsPool, the Java Executors-based concurrent collection processor

Usage of GParsExecutorsPool

The GParsExecutorsPool class enables a Java Executors-based concurrency DSL for collections and objects.

The GParsExecutorsPool class can be used as a pure-JDK-based collections parallel processor. Unlike the GParsPool class, GParsExecutorsPool doesn’t require fork/join thread pools but, instead, leverages the standard JDK executor services to parallelize closures to process a collection or an object iteratively.

It needs to be stated, however, that GParsPool typically performs much better than GParsExecutorsPool does.

| GParsPool typically performs much better than GParsExecutorsPool |
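To make the executor-based approach concrete, here is a minimal plain-JDK Java sketch of processing a collection by submitting one task per element to a standard executor service and then gathering the results. It only illustrates the general technique and is not how GParsExecutorsPool is implemented; the class and the collectOnExecutor() helper are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

class ExecutorCollectSketch {
    // Hypothetical helper: runs fn for every element on a fixed-size executor pool.
    static <T, R> List<R> collectOnExecutor(List<T> items, Function<T, R> fn, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<R>> futures = new ArrayList<>();
            for (T item : items) {
                Callable<R> task = () -> fn.apply(item);
                futures.add(pool.submit(task)); // one task per element
            }
            List<R> results = new ArrayList<>();
            for (Future<R> future : futures) {
                results.add(future.get()); // blocks until that element is done
            }
            return results;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<Integer> result = collectOnExecutor(Arrays.asList(1, 2, 3, 4, 5), n -> n * 10, 3);
        System.out.println(result); // [10, 20, 30, 40, 50]
    }
}
```

Because the futures are read back in submission order, the result list keeps the order of the input even though the tasks may finish in any order.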

Examples of Use

//multiply numbers asynchronously

GParsExecutorsPool.withPool {

Collection<Future> result = [1, 2, 3, 4, 5].collectParallel{it * 10}

assert new HashSet([10, 20, 30, 40, 50]) == new HashSet((Collection)result*.get())

}

//multiply numbers asynchronously using an asynchronous closure

GParsExecutorsPool.withPool {

def closure={it * 10}

def asyncClosure=closure.async()

Collection<Future> result = [1, 2, 3, 4, 5].collect(asyncClosure)

assert new HashSet([10, 20, 30, 40, 50]) == new HashSet((Collection)result*.get())

}

The passed-in closure takes an instance of an ExecutorService as a parameter, which can then be used freely inside the closure.

//submit a long-running task directly to the executor service

GParsExecutorsPool.withPool(5) {ExecutorService service ->

service.submit({performLongCalculation()} as Runnable)

}

The GParsExecutorsPool.withPool() method accepts optional parameters: the number of threads in the created pool and a thread factory.

withPool(10) {...}

withPool(20, threadFactory) {...}

The GParsExecutorsPool.withExistingPool() takes an already existing executor service instance to reuse. The DSL is only valid within the associated block of code and only for the thread that has called the withPool() or withExistingPool() method.

| The withExistingPool() method doesn't wait for executor service threads to finish. |

The withPool() method returns control only after all the worker threads have finished their tasks and the executor service has been destroyed, returning the resulting value of the associated block of code.

| Statically import the GParsExecutorsPool class as import static groovyx.gpars.GParsExecutorsPool.* to omit the GParsExecutorsPool class name. |

withPool {

def result = [1, 2, 3, 4, 5].findParallel{Number number -> number > 2}

assert result in [3, 4, 5]

}

The following methods are currently supported on all objects that support iterations in Groovy:

- eachParallel()
- eachWithIndexParallel()
- collectParallel()
- findAllParallel()
- findParallel()
- allParallel()
- anyParallel()
- grepParallel()
- groupByParallel()

Meta-class Enhancer

As an alternative, you can use the GParsExecutorsPoolEnhancer class to enhance the meta-classes of any classes or individual instances with the asynchronous methods.

import groovyx.gpars.GParsExecutorsPoolEnhancer

def list = [1, 2, 3, 4, 5, 6, 7, 8, 9]

GParsExecutorsPoolEnhancer.enhanceInstance(list)

println list.collectParallel {it * 2 }

def animals = ['dog', 'ant', 'cat', 'whale']

GParsExecutorsPoolEnhancer.enhanceInstance animals

println (animals.anyParallel {it ==~ /ant/} ? 'Found an ant' : 'No ants found')

println (animals.allParallel {it.contains('a')} ? 'All animals contain a' : 'Some animals can live without an a')

When using the GParsExecutorsPoolEnhancer class, you're not restricted to a withPool() block with the use of the GParsExecutorsPool DSLs. The enhanced classes or instances remain enhanced until they are garbage collected.

Exception Handling

Exceptions can be thrown while processing any of the passed-in closures. An AsyncException instance wraps the original exceptions and is re-thrown from the xxxParallel methods.

Avoid Side-effects in Functions

Once again we need to warn you about using closures with side-effects. Please avoid logic that affects objects beyond the scope of the single, currently processed element. Please avoid logic or closures that keep state. Don’t do that! It’s dangerous to pass them to any of the xxxParallel() methods.

Memoize

The memoize function enables caching of a function’s return values. Repeated calls to the memoized function with the same argument values will, instead of invoking the calculation encoded in the original function, retrieve the resulting value from an internal, transparent cache.

Provided the calculation is considerably slower than retrieving a cached value from the cache, developers can trade memory for performance.

Check out the example under Examples Of Use, where we attempt to scan multiple websites for particular content.

The memoize functionality of GPars was donated to Groovy for version 1.8 and if you run on Groovy 1.8 or later, we recommend you use the Groovy functionality.

Memoize, in GPars, is almost identical, except that it searches the memoized caches concurrently using the surrounding thread pool. This may give performance benefits in some scenarios.

Examples Of Use

GParsPool.withPool {

def urls = ['http://www.dzone.com', 'http://www.theserverside.com', 'http://www.infoq.com']

Closure download = {url ->

println "Downloading $url"

url.toURL().text.toUpperCase()

}

Closure cachingDownload = download.gmemoize()

println 'Groovy sites today: ' + urls.findAllParallel {url -> cachingDownload(url).contains('GROOVY')}

println 'Grails sites today: ' + urls.findAllParallel {url -> cachingDownload(url).contains('GRAILS')}

println 'Griffon sites today: ' + urls.findAllParallel {url -> cachingDownload(url).contains('GRIFFON')}

println 'Gradle sites today: ' + urls.findAllParallel {url -> cachingDownload(url).contains('GRADLE')}

println 'Concurrency sites today: ' + urls.findAllParallel {url -> cachingDownload(url).contains('CONCURRENCY')}

println 'GPars sites today: ' + urls.findAllParallel {url -> cachingDownload(url).contains('GPARS')}

}

Notice how closures are enhanced inside the GParsPool.withPool() blocks with a memoize function (gmemoize() in the example above). It returns a new closure that wraps the original closure with a cache.

In the previous example, we call the cachingDownload function in several places in the code. However, each unique url is downloaded only once, the first time it's needed. The values are then cached and available for subsequent calls. Additionally, these values are also available to all threads, no matter which thread originally came first with a download request for that particular url and had to handle the actual calculation/download.
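The caching behavior described here can be sketched in plain JDK Java with a thread-safe map that is consulted before the wrapped function runs. This is only an illustration of the idea under simplifying assumptions, not the GPars or Groovy implementation; the memoize() and realCalls() helpers are hypothetical, and upper-casing a string stands in for a real download.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

class MemoizeSketch {
    // Wraps fn with a cache shared by all calling threads.
    static <K, V> Function<K, V> memoize(Function<K, V> fn) {
        Map<K, V> cache = new ConcurrentHashMap<>();
        // computeIfAbsent invokes fn only when the key has no cached value yet.
        return key -> cache.computeIfAbsent(key, fn);
    }

    // Counts how often the wrapped function really runs for the given lookups.
    static int realCalls(String... urls) {
        AtomicInteger calls = new AtomicInteger();
        Function<String, String> cachingDownload = memoize(url -> {
            calls.incrementAndGet();
            return url.toUpperCase(); // hypothetical stand-in for a real download
        });
        for (String url : urls) {
            cachingDownload.apply(url);
        }
        return calls.get();
    }

    public static void main(String[] args) {
        // Four lookups, but only two distinct urls, so the function runs twice.
        System.out.println(realCalls("a", "b", "a", "a")); // 2
    }
}
```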

So, to wrap up, a memoize call shields a function by using a cache of past return values.

However, memoize can do even more! In some algorithms, adding a little memory may have a dramatic impact on the computational complexity of the calculation. Let’s look at a classical example of Fibonacci numbers.

Fibonacci Example

A purely functional, recursive implementation that follows the definition of Fibonacci numbers is exponentially complex:

Closure fib = {n -> n > 1 ? call(n - 1) + call(n - 2) : n}

Try calling the fib function with numbers around 30 and you’ll see how slow it is.

Now with a little twist and an added memoize cache, the algorithm magically turns into a linearly complex one:

Closure fib

fib = {n -> n > 1 ? fib(n - 1) + fib(n - 2) : n}.gmemoize()

The extra memory we added has now cut off all but one recursive branch of the calculation. And all subsequent calls to the same fib function will also benefit from the cached values.

Look below to see how the memoizeAtMost variant can reduce memory consumption in our example, yet preserve the linear complexity of the algorithm.

Available Variants

Memoize

The basic variant keeps values in the internal cache for the whole lifetime of the memoized function. It provides the best performance characteristics of all the variants.

memoizeAtMost

Allows us to set a hard limit on the number of items cached. Once the limit has been reached, each newly added value evicts the oldest value from the cache using the LRU (Least Recently Used) strategy.

So for our Fibonacci number example, we could safely reduce the cache size to two items:

Closure fib

fib = {n -> n > 1 ? fib(n - 1) + fib(n - 2) : n}.memoizeAtMost(2)

Setting an upper limit on the cache size serves two purposes:

- Keeps the memory footprint of the cache within defined boundaries
- Preserves desired performance characteristics of the function. Too large a cache increases the time to retrieve a cached value, compared to the time it would have taken to calculate the result directly.
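A size-bounded cache with least-recently-used eviction can be sketched in plain JDK Java with an access-ordered LinkedHashMap. The sketch below only illustrates the eviction strategy and is not the GPars implementation; the class, the memoizeAtMost() helper shown here and the realCalls() counter are hypothetical, and null results are simply not cached.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

class LruMemoizeSketch {
    static <K, V> Function<K, V> memoizeAtMost(int maxSize, Function<K, V> fn) {
        // accessOrder = true keeps entries ordered from least to most recently used.
        Map<K, V> cache = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > maxSize; // evict the least recently used entry
            }
        };
        return key -> {
            synchronized (cache) {
                V cached = cache.get(key);
                if (cached != null) {
                    return cached;
                }
            }
            V value = fn.apply(key); // computed outside the lock
            synchronized (cache) {
                cache.put(key, value);
            }
            return value;
        };
    }

    // Counts how often the wrapped function really runs for the given keys.
    static int realCalls(int maxSize, int... keys) {
        AtomicInteger calls = new AtomicInteger();
        Function<Integer, Integer> square = memoizeAtMost(maxSize, n -> {
            calls.incrementAndGet();
            return n * n;
        });
        for (int key : keys) {
            square.apply(key);
        }
        return calls.get();
    }

    public static void main(String[] args) {
        System.out.println(realCalls(2, 1, 2, 3, 1)); // 4: key 1 was evicted when 3 arrived
        System.out.println(realCalls(2, 1, 2, 1, 1)); // 2: repeated lookups of 1 are cache hits
    }
}
```

Computing the value outside the lock means two threads may occasionally compute the same key at once; that trade-off keeps slow calculations from blocking unrelated lookups.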

memoizeAtLeast

Allows unlimited growth of the internal cache until the JVM's garbage collector decides to step in and evict SoftReference entries (used by our implementation) from memory.

The single parameter to the memoizeAtLeast() method indicates the minimum number of cached items that should be protected from gc eviction. The cache will never shrink below the specified number of entries. The cache protects only the most recently used items from eviction, using the LRU (Least Recently Used) strategy.

memoizeBetween

Combines the memoizeAtLeast and memoizeAtMost methods to allow the cache to grow and shrink in the range between the two parameter values depending on available memory and the gc activity.

The cache size will never exceed the upper size limit to preserve desired performance characteristics of the cache.

Map-Reduce

The Parallel Collection Map/Reduce DSL gives GPars a more functional flavor. In general, the Map/Reduce DSL may be used for the same purpose as the xxxParallel() family of methods and has very similar semantics. On the other hand, Map/Reduce can perform considerably faster, if you need to chain multiple methods together to process a single collection in multiple steps:

println 'Number of occurrences of the word GROOVY today: ' + urls.parallel

.map {it.toURL().text.toUpperCase()}

.filter {it.contains('GROOVY')}

.map{it.split()}

.map{it.findAll{word -> word.contains 'GROOVY'}.size()}

.sum()

The xxxParallel() methods must follow the same contract as their non-parallel peers. So a collectParallel() method must return a legal collection of items, which you can treat as a Groovy collection.

Internally, the parallel collect method builds an efficient parallel structure, called a parallel array. It then performs the required operation concurrently. Before returning, it destroys the Parallel Array as it builds a collection of results to return to you.

A potential call to, for example, findAllParallel() on the resulting collection would repeat the whole process of construction and destruction of a Parallel Array instance under the covers.

With Map/Reduce, you turn your collection into a Parallel Array and back again only a single time. The Map/Reduce family of methods do not return Groovy collections, but can freely pass along the internal Parallel Arrays directly.

Invoking the parallel property of a collection will build a Parallel Array for the collection and then return a thin wrapper around the Parallel Array instance. Then you can chain any of these methods together to get an answer:

- map()
- reduce()
- filter()
- size()
- sum()
- min()
- max()
- sort()
- groupBy()
- combine()

Returning a plain Groovy collection instance is always just a matter of retrieving the collection property.

def myNumbers = (1..1000).parallel.filter{it % 2 == 0}.map{Math.sqrt it}.collection

Avoid Side-effects in Functions

Once again we need to warn you. To avoid nasty surprises, please keep any closures you pass to the Map/Reduce functions stateless and free of side-effects.

| To avoid nasty surprises keep your closures stateless |

Availability

This feature is only available when using the Fork/Join-based GParsPool, not GParsExecutorsPool.

Classical Example

A classical example, inspired by thevery, counts occurrences of words in a string:

import static groovyx.gpars.GParsPool.withPool

def words = "This is just plain text to count words in"

print count(words)

def count(arg) {

withPool {

return arg.parallel

.map{[it, 1]}

.groupBy{it[0]}.getParallel()

.map {it.value=it.value.size();it}

.sort{-it.value}.collection

}

}

The same example can be implemented with the more general combine operation:

def words = "This is just plain text to count words in"

print count(words)

def count(arg) {

withPool {

return arg.parallel

.map{[it, 1]}

.combine(0) {sum, value -> sum + value}.getParallel()

.sort{-it.value}.collection

}

}

Combine

The combine operation expects an input list of tuples (two-element lists), often considered to be key-value pairs (such as [ [key1, value1], [key2, value2], [key1, value3], [key3, value4] … ] ). These might have potentially repeating keys.

When invoked, the combine method merges the values of identical keys using the provided accumulator function. This produces a map of the original (unique) keys and their (now) accumulated values.

E.g. [[a, b], [c, d], [a, e], [c, f]] will be combined into [a : b+e, c : d+f]. The logic for merging the values, such as the '+' operation here, needs to be provided as the accumulation closure.

The accumulation function argument needs to specify a function to use when combining (accumulating) values belonging to the same key. An initial accumulator value needs to be provided as well.

Since the combine method processes items in parallel, the initial accumulator value will be reused multiple times. Thus the provided value must allow for reuse.

It should either be a cloneable (or immutable) value or a closure returning a fresh initial accumulator each time it’s requested. Good combinations of accumulator functions and reusable initial values include:

accumulator = {List acc, value -> acc << value}; initialValue = []

accumulator = {List acc, value -> acc << value}; initialValue = {-> []}

accumulator = {int sum, int value -> sum + value}; initialValue = 0

accumulator = {int sum, int value -> sum + value}; initialValue = {-> 0}

accumulator = {ShoppingCart cart, Item value -> cart.addItem(value)}; initialValue = {-> new ShoppingCart()}

| The return type is a map. |

E.g. [['he', 1], ['she', 2], ['he', 2], ['me', 1], ['she', 5], ['he', 1]] with an initial value of zero will combine into ['he' : 4, 'she' : 7, 'me' : 1]
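The semantics of this example can be sketched in plain JDK Java: pairs are processed in parallel, and each key folds its values into an accumulator that starts from a fresh initial value. This is a rough illustration of what combine computes, not how GPars implements it; the CombineSketch class and its helpers are hypothetical, and the initial value is passed as a supplier so that every key gets its own starting accumulator.

```java
import java.util.AbstractMap;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.BiFunction;
import java.util.function.Supplier;

class CombineSketch {
    // Merges the values of identical keys with the given accumulator function.
    static <K, V, A> Map<K, A> combine(List<Map.Entry<K, V>> pairs,
                                       Supplier<A> initialValue,
                                       BiFunction<A, V, A> accumulator) {
        Map<K, A> result = new ConcurrentHashMap<>();
        pairs.parallelStream().forEach(pair ->
                // compute() is atomic per key, so concurrent updates do not get lost.
                result.compute(pair.getKey(), (key, acc) ->
                        accumulator.apply(acc == null ? initialValue.get() : acc, pair.getValue())));
        return result;
    }

    static Map.Entry<String, Integer> pair(String key, int value) {
        return new AbstractMap.SimpleImmutableEntry<>(key, value);
    }

    // The 'he' / 'she' / 'me' data from the text, combined with an initial value of zero.
    static Map<String, Integer> pronounTotals() {
        List<Map.Entry<String, Integer>> pairs = Arrays.asList(
                pair("he", 1), pair("she", 2), pair("he", 2),
                pair("me", 1), pair("she", 5), pair("he", 1));
        return combine(pairs, () -> 0, (sum, value) -> sum + value);
    }

    public static void main(String[] args) {
        // Holds he=4, she=7, me=1 (the print order of the map is not guaranteed).
        System.out.println(pronounTotals());
    }
}
```

Since the values of a key may be accumulated in any order, the accumulator should not depend on the order in which values arrive, as is the case for addition.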

For more involved scenarios when you combine() complex objects, a good strategy here is to have a complete class to use as a key for common use cases and to apply different keys for uncommon cases.

import groovy.transform.ToString

import groovy.transform.TupleConstructor

import static groovyx.gpars.GParsPool.withPool

// declare a complete class to use in combination processing

@TupleConstructor @ToString

class PricedCar implements Cloneable { // either Cloneable or Immutable

String model

String color

Double price

// declare a way to resolve comparison logic

boolean equals(final o) {

if (this.is(o)) return true

if (getClass() != o.class) return false

final PricedCar pricedCar = (PricedCar) o

if (color != pricedCar.color) return false

if (model != pricedCar.model) return false

return true

}

int hashCode() {

int result

result = (model != null ? model.hashCode() : 0)

result = 31 * result + (color != null ? color.hashCode() : 0)

return result

}

@Override

protected Object clone() {

return super.clone()

}

}

// some data

def cars = [new PricedCar('F550', 'blue', 2342.223),

new PricedCar('F550', 'red', 234.234),

new PricedCar('Da', 'white', 2222.2),

new PricedCar('Da', 'white', 1111.1)]

withPool {

//Combine by model

def result =

cars.parallel.map {

[it.model, it]

}.combine(new PricedCar('', 'N/A', 0.0)) {sum, value ->

sum.model = value.model

sum.price += value.price

sum

}.values()

println result

//Combine by model and color (using the PricedCar's equals and hashCode)

result =

cars.parallel.map {

[it, it]

}.combine(new PricedCar('', 'N/A', 0.0)) {sum, value ->

sum.model = value.model

sum.color = value.color

sum.price += value.price

sum

}.values()

println result

}

Parallel Arrays

As an alternative, the efficient tree-based data structures defined in JSR-166y - Java Concurrency can be used directly. The parallelArray property on any collection or object will return a ParallelArray instance holding the elements of the original collection. These then can be manipulated through the jsr166y API.

Please refer to jsr166y documentation for API details.

import groovyx.gpars.extra166y.Ops

groovyx.gpars.GParsPool.withPool {

assert 15 == [1, 2, 3, 4, 5].parallelArray.reduce({a, b -> a + b} as Ops.Reducer, 0) //summarize

assert 55 == [1, 2, 3, 4, 5].parallelArray.withMapping({it ** 2} as Ops.Op).reduce({a, b -> a + b} as Ops.Reducer, 0) //summarize squares

assert 20 == [1, 2, 3, 4, 5].parallelArray.withFilter({it % 2 == 0} as Ops.Predicate) //summarize squares of even numbers

.withMapping({it ** 2} as Ops.Op)

.reduce({a, b -> a + b} as Ops.Reducer, 0)

assert 'aa:bb:cc:dd:ee' == 'abcde'.parallelArray //concatenate duplicated characters with separator

.withMapping({it * 2} as Ops.Op)

.reduce({a, b -> "$a:$b"} as Ops.Reducer, "")

}

Asynchronous Invocations

Long running background tasks happen a lot in most systems.

Typically, a main thread of execution wants to initialize a few calculations, start downloads, do searches, etc. even when the results may not be needed immediately.

GPars gives the developers the tools to schedule asynchronous activities for background processing and collect the results later, when they’re needed.

Usage of GParsPool and GParsExecutorsPool Asynchronous Processing Facilities

Both the GParsPool and GParsExecutorsPool classes provide nearly identical services while leveraging different underlying machinery.

Closures Enhancements

The following methods are added to closures inside the GPars(Executors)Pool.withPool() blocks:

- async() - Creates an asynchronous variant of the supplied closure which, when invoked, returns a future object for the potential return value
- callAsync() - Calls a closure in a separate thread while supplying the given arguments, returning a future object for the potential return value

GParsPool.withPool() {

Closure longLastingCalculation = {calculate()}

Closure fastCalculation = longLastingCalculation.async() //create a new closure, which starts the original closure on a thread pool

Future result=fastCalculation() //returns almost immediately

//do stuff while calculation performs ...

println result.get()

}

GParsPool.withPool() {

/**

* The callAsync() method is an asynchronous variant of the default call() method to invoke a closure.

* It will return a Future for the result value.

*/

assert 6 == {it * 2}.call(3)

assert 6 == {it * 2}.callAsync(3).get()

}
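For readers coming from Java, the idea behind async() can be sketched with the JDK's CompletableFuture: wrap a function so that calling it starts the work on a pool and immediately hands back a future. This is a plain-JDK analogue under that assumption, not the GPars API; the AsyncClosureSketch class and its helpers are hypothetical names.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;

class AsyncClosureSketch {
    // Turns fn into a function that starts fn on the pool and returns a future.
    static <T, R> Function<T, CompletableFuture<R>> async(Function<T, R> fn, ExecutorService pool) {
        return arg -> CompletableFuture.supplyAsync(() -> fn.apply(arg), pool);
    }

    static int doubleAsync(int n) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Function<Integer, CompletableFuture<Integer>> asyncDouble = async(x -> x * 2, pool);
            CompletableFuture<Integer> result = asyncDouble.apply(n); // returns almost immediately
            // ... do other work while the calculation runs ...
            return result.join(); // blocks only when the value is needed
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(doubleAsync(3)); // 6
    }
}
```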

Timeouts

The callTimeoutAsync() methods, taking either a long value or a Duration instance, provide a timeout mechanism for asynchronous calls.

{->

while(true) {

Thread.sleep 1000 //Simulate a bit of interesting calculation

if (Thread.currentThread().isInterrupted()) break; //We've been cancelled

}

}.callTimeoutAsync(2000)

To allow cancellation, our asynchronously running code must keep checking the interrupted flag of its own thread and stop calculating when/if the flag is set to true.
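The cooperative nature of cancellation can be sketched with plain JDK classes: the caller waits for a bounded time and then cancels the future, which sets the worker thread's interrupted flag; the task itself has to notice the flag and stop. This is a JDK analogue for illustration only, not the GPars implementation, and the TimeoutSketch class and cancelAfter() helper are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class TimeoutSketch {
    // Returns true if the task noticed the interrupt and stopped.
    static boolean cancelAfter(long timeoutMillis) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        CountDownLatch stopped = new CountDownLatch(1);
        try {
            Future<?> task = pool.submit(() -> {
                try {
                    while (!Thread.currentThread().isInterrupted()) {
                        // simulate a slice of interesting calculation
                    }
                } finally {
                    stopped.countDown(); // signal that the loop has ended
                }
            });
            try {
                task.get(timeoutMillis, TimeUnit.MILLISECONDS);
            } catch (TimeoutException e) {
                task.cancel(true); // sets the worker thread's interrupted flag
            }
            return stopped.await(5, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println(cancelAfter(100)); // true
    }
}
```

A task that never checks the flag (and never calls an interruptible method) would keep running after the timeout, which is why the check inside the loop matters.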

Executor Service Enhancements

The ExecutorService and ForkJoinPool classes are enhanced with the '<<' (leftShift) operator to submit tasks to the pool and return a Future for the result.

GParsExecutorsPool.withPool {ExecutorService executorService ->

executorService << {println 'Inside parallel task'}

}

Running Functions (closures) in Parallel

The GParsPool and GParsExecutorsPool classes also provide handy methods executeAsync() and executeAsyncAndWait() to easily run multiple closures asynchronously.

Example:

GParsPool.withPool {

assert [10, 20] == GParsPool.executeAsyncAndWait({calculateA()}, {calculateB()}) //waits for results

assert [10, 20] == GParsPool.executeAsync({calculateA()}, {calculateB()})*.get() //returns Futures instead and doesn't wait for results to be calculated

}
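The run-several-tasks-and-wait pattern has a close plain-JDK counterpart in ExecutorService.invokeAll(), sketched below in Java. It is offered as an analogue rather than as the way GPars implements executeAsyncAndWait(); the class name and the two constant-returning tasks, standing in for calculateA() and calculateB(), are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ExecuteAndWaitSketch {
    // Runs all tasks on a pool and returns their results in task order.
    static <T> List<T> executeAndWait(List<Callable<T>> tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, tasks.size()));
        try {
            List<T> results = new ArrayList<>();
            // invokeAll blocks until every task has completed.
            for (Future<T> future : pool.invokeAll(tasks)) {
                results.add(future.get());
            }
            return results;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    static List<Integer> demo() {
        Callable<Integer> calculateA = () -> 10; // hypothetical stand-in
        Callable<Integer> calculateB = () -> 20; // hypothetical stand-in
        return executeAndWait(Arrays.asList(calculateA, calculateB));
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [10, 20]
    }
}
```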

Composable Asynchronous Functions

Functions are to be composed. In fact, composing side-effect-free functions is very easy. Much easier and more reliable than composing objects, for example.

Given the same input, functions always return the same result. They never change their behavior unexpectedly, nor do they break when multiple threads call them at the same time.

Functions in Groovy

We can treat Groovy closures as functions. They take arguments, do their calculation and return a value. Provided you don’t let your closures touch anything outside their scope, your closures are well-behaved, just like pure functions. Functions that you can combine for a higher good.

def sum = (0..100000).inject(0, {a, b -> a + b})

In this example, combining a function that adds two numbers, {a, b -> a + b}, with the inject function, which iterates through the whole collection, quickly sums up all items.

Then, replacing the adding function with a comparison function immediately gives you a combined function to calculate maximums.

def max = myNumbers.inject(0, {a, b -> a>b?a:b})

You see, functional programming is popular for a reason.

Are We Concurrent Yet?

This all works just fine until you realize you're not using the full power of your expensive hardware. These functions are just plain sequential! No parallelism is used! All but one of the processor cores are doing nothing, they're idle, totally wasted!

| All but one of the processor cores are doing nothing! They're idle! Totally wasted! |

To make things more obvious, here’s an example of combining four functions, which are supposed to check whether a particular web page matches the contents of a local file. We need to download the page, load the file, calculate hashes of both and finally compare the resulting numbers.

Closure download = {String url ->

url.toURL().text

}

Closure loadFile = {String fileName ->

... //load the file here

}

Closure hash = {s -> s.hashCode()}

Closure compare = {int first, int second ->

first == second

}

def result = compare(hash(download('http://www.gpars.org')), hash(loadFile('/coolStuff/gpars/website/index.html')))

println "The result of comparison: " + result

Each of the functions is responsible for one particular job: one downloads the content, a second loads the file, a third calculates the hashes, and the fourth does the comparison.

Combining the functions is as simple as nesting their calls.

Making It All Asynchronous

The downside of our code is that we haven’t leveraged the independence of the download() and the loadFile() functions. Neither have we allowed the two hashes to be run concurrently. They could well run in parallel, but our approach to combine functions restricts parallelism.

Obviously not all of the functions can run concurrently. Some functions depend on results of others. They cannot start before the other function finishes. We need to block them until their parameters are available. The hash() function needs a string to work on. The compare() function needs two numbers to compare.

So we can run some functions in parallel, while others must wait until their inputs are ready. Seems like a challenging task.

Things Are Bright in the Functional World

Luckily, the dependencies between functions are already expressed implicitly in the code. There's no need to duplicate that dependency information. If one function takes parameters and the parameters need to be calculated first by another function, we implicitly have a dependency here.

The hash() function depends on loadFile() as well as on the download() functions in our example. The inject function in our earlier example depended on the results of the addition functions gradually invoked on all elements of the collection.

In the best traditions of GPars, we've made it very straightforward for you to convince any function to believe in the promises of other functions. Call the asyncFun() function on a closure and you're asynchronous!

withPool {

def maxPromise = numbers.inject(0, {a, b -> a>b?a:b}.asyncFun())

println "Look Ma, I can talk to the user while the math is being done for me!"

println maxPromise.get()

}

The inject function doesn't really care what objects are returned from the function passed to it. Maybe it's a little surprised that each call returns so fast, but it doesn't moan much, keeps iterating and finally returns the overall result we expect.

Now is the time you should stand behind what you say and do what you want others to do. Don’t frown at the result and just accept that you got back just a promise. A promise to get the answer delivered as soon as the calculation is complete. The extra heat from your laptop is an indication that the calculation exploits natural parallelism in your functions and makes its best effort to deliver the result to you quickly.

withPool {

def sumPromise = (0..100000).inject(0, {a, b -> a + b}.asyncFun())

println "Are we done yet? " + sumPromise.bound

sumPromise.whenBound {sum -> println sum}

}

Can Things Go Wrong?

Sure. But you’ll get an exception thrown from the promise get() method.

try {

sumPromise.get()

} catch (MyCalculationException e) {

println "Guess, things are not ideal today."

}

This Is All Fine, But What Functions Can Really Be Combined?

There are no limits to your ambitions. Take any sequential functions you need to combine and you should be able to combine their asynchronous variants as well.

Review our initial example comparing the content of a file with a web page. We simply make all the functions asynchronous by calling the asyncFun() method on them and we are ready to set off.

Closure download = {String url ->

url.toURL().text

}.asyncFun()

Closure loadFile = {String fileName ->

... //load the file here

}.asyncFun()

Closure hash = {s -> s.hashCode()}.asyncFun()

Closure compare = {int first, int second ->

first == second

}.asyncFun()

def result = compare(hash(download('http://www.gpars.org')), hash(loadFile('/coolStuff/gpars/website/index.html')))

println 'Allowed to do something else now'

println "The result of comparison: " + result.get()

Calling Asynchronous Functions from Within Asynchronous Functions

Another very valuable attribute of asynchronous functions is that promises can be combined.

| Promises can be combined! |

import static groovyx.gpars.GParsPool.withPool

withPool {
    Closure plus = {Integer a, Integer b ->
        sleep 3000
        println 'Adding numbers'
        a + b
    }.asyncFun()  // ok, here's one func

    Closure multiply = {Integer a, Integer b ->
        sleep 2000
        a * b
    }.asyncFun()  // and second one

    Closure measureTime = {->
        sleep 3000
        4
    }.asyncFun()  // and another

    // declare a function within a function
    Closure distance = {Integer initialDistance, Integer velocity, Integer time ->
        plus(initialDistance, multiply(velocity, time))
    }.asyncFun()  // and another

    Closure chattyDistance = {Integer initialDistance, Integer velocity, Integer time ->
        println 'All parameters are now ready - starting'
        println 'About to call another asynchronous function'
        def innerResultPromise = plus(initialDistance, multiply(velocity, time))
        println 'Returning the promise for the inner calculation as my own result'
        return innerResultPromise
    }.asyncFun()  // and declare (but not run) a final asynch. function

    // fine, now let's execute those previous asynch. functions
    println "Distance = " + distance(100, 20, measureTime()).get() + ' m'
    println "ChattyDistance = " + chattyDistance(100, 20, measureTime()).get() + ' m'
}

If an asynchronous function (such as the distance function in this example) calls another asynchronous function in its body (e.g. plus) and returns the promise of the invoked function, the inner function’s (plus) resulting promise will combine with the outer function’s (distance) result promise.

The inner function (plus) will bind its result to the outer function’s (distance) promise once it finishes its calculation. This ability of promises to combine allows functions to cease their calculation without blocking a thread. This happens not only when they wait for parameters, but also whenever they call another asynchronous function anywhere in their body.

Methods as Asynchronous Functions

Methods can be referred to as closures using the .& operator. These closures can then be transformed using the asyncFun method into composable asynchronous functions just like ordinary closures.

import static groovyx.gpars.GParsPool.withPool

class DownloadHelper {
    String download(String url) {
        url.toURL().text
    }

    int scanFor(String word, String text) {
        text.findAll(word).size()
    }

    String lower(s) {
        s.toLowerCase()
    }
}

//now we'll make the methods asynchronous
withPool {
    final DownloadHelper d = new DownloadHelper()
    Closure download = d.&download.asyncFun()  // notice the .& syntax
    Closure scanFor = d.&scanFor.asyncFun()    // and here
    Closure lower = d.&lower.asyncFun()        // and here

    //asynchronous processing
    def result = scanFor('groovy', lower(download('http://www.infoq.com')))
    println 'Doing something else for now'
    println result.get()
}

Using Annotations to Create Asynchronous Functions

Instead of calling the asyncFun() function, the @AsyncFun annotation can be used to annotate Closure-typed fields. The fields have to be initialized in-place and the containing class needs to be instantiated within a withPool block.

import static groovyx.gpars.GParsPool.withPool
import groovyx.gpars.AsyncFun

class DownloadingSearch {
    @AsyncFun Closure download = {String url ->
        url.toURL().text
    }

    @AsyncFun Closure scanFor = {String word, String text ->
        text.findAll(word).size()
    }

    @AsyncFun Closure lower = {s -> s.toLowerCase()}

    void scan() {
        def result = scanFor('groovy', lower(download('http://www.infoq.com')))  //asynchronous processing
        println 'Allowed to do something else now'
        println result.get()
    }
}

withPool {
    new DownloadingSearch().scan()
}

Alternative Pools

The AsyncFun annotation, by default, uses an instance of GParsPool from the wrapping withPool block. You may, however, specify the type of pool explicitly:

@AsyncFun(GParsExecutorsPoolUtil) def sum6 = {a, b -> a + b }

Blocking Functions Through Annotations

The @AsyncFun annotation also lets us specify whether the resulting function should have blocking (true) or non-blocking (false, the default) semantics.

@AsyncFun(blocking = true)
def sum = {a, b -> a + b }
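To see the two modes side by side, here is a small sketch. The Calculator class and its field names are hypothetical and serve only as an illustration; GPars is assumed to be on the classpath.

```groovy
import static groovyx.gpars.GParsPool.withPool
import groovyx.gpars.AsyncFun

// Hypothetical class used only to contrast the two modes
class Calculator {
    // Non-blocking (the default): calling the function yields a promise
    @AsyncFun Closure asyncSum = {a, b -> a + b}

    // Blocking: calling the function waits and yields the value itself
    @AsyncFun(blocking = true) Closure blockingSum = {a, b -> a + b}
}

def promised = null
def direct = null

withPool {
    def calc = new Calculator()    // must be instantiated inside withPool

    promised = calc.asyncSum(1, 2)
    direct = calc.blockingSum(1, 2)

    println promised.get()    // ask the promise for its value
    println direct            // already a plain value, no get() needed
}
```

With blocking = true the caller waits for the result, so the call reads like an ordinary synchronous one, while the calculation itself still runs on the pool.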

Explicit and Delayed Pool Assignment

When using the GPars(Executors)PoolUtil.asyncFun() function directly to create an asynchronous function, you have two additional ways to assign a thread pool to the function.

- The thread pool to be used by the function can be specified explicitly as an additional argument at creation time.

- The implicit thread pool can be obtained from the surrounding scope at invocation time rather than at creation time.